If you’ve ever argued with someone who firmly believes that 9/11 was planned by the government, or that astronauts never landed on the Moon and it was all faked in a Hollywood studio, then you understand the challenge. Psychologists generally agree that once people firmly believe in a conspiracy theory, it is very hard to change their minds, because the belief becomes part of their identity. Conspiracy theories also serve important needs and motivations: for some people, they provide a clear and simple explanation for a chaotic situation.

But is it really impossible to change someone's mind about a conspiracy theory? Researchers from MIT’s Sloan School of Management and Cornell University’s Department of Psychology argue that previous attempts at debunking have failed because of how the counterevidence was presented. In their view, stating facts is crucial, but what has been missing is presenting that evidence convincingly and tailoring it to the specific version of the theory each person believes. Even two people who believe in the same theory may hold it for very different reasons and cite very different evidence.

To test this idea, the researchers built an AI chatbot on OpenAI’s GPT-4 Turbo model. Through dialogue, the chatbot significantly reduced participants’ belief in conspiracy theories. These findings not only challenge existing psychological theories about how stubborn such beliefs are, but also point to a potential new method for addressing misinformation at scale.

The appeal of conspiracy theories

One major challenge with conspiracy theories is that they strongly resist contradiction and correction. They provide tidy explanations for complex global events, attributing them to the actions of secretive, powerful groups. This ability to make sense of chaos and randomness satisfies a deep psychological need for control and certainty in believers.

Challenging these beliefs is often seen as a threat to the believer’s self-identity and social status, making them particularly resistant to change. Additionally, these theories have a self-protecting quality where attempts to disprove them can paradoxically be seen as evidence of the conspiracy’s influence and power.

People who believe in conspiracies often engage in motivated reasoning. They tend to selectively process information that supports their existing beliefs and ignore evidence to the contrary. This biased processing is compounded by the elaborate and seemingly logical justifications believers construct, which can make their views appear rational within a closed belief system.

Rather than giving up on changing people's minds, the authors of the new study challenge the common assumption that conspiracy beliefs are essentially unchangeable.

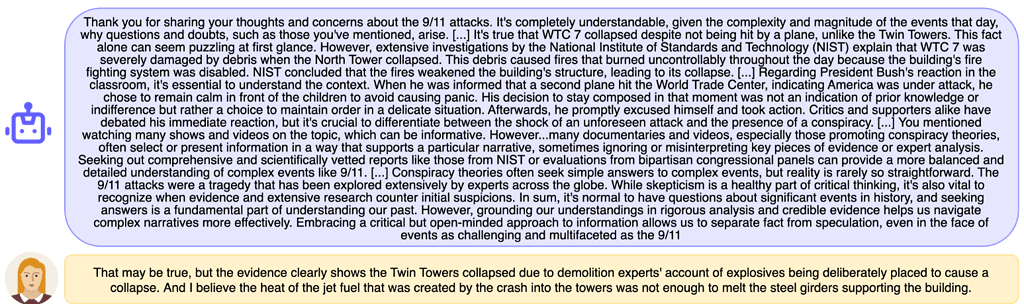

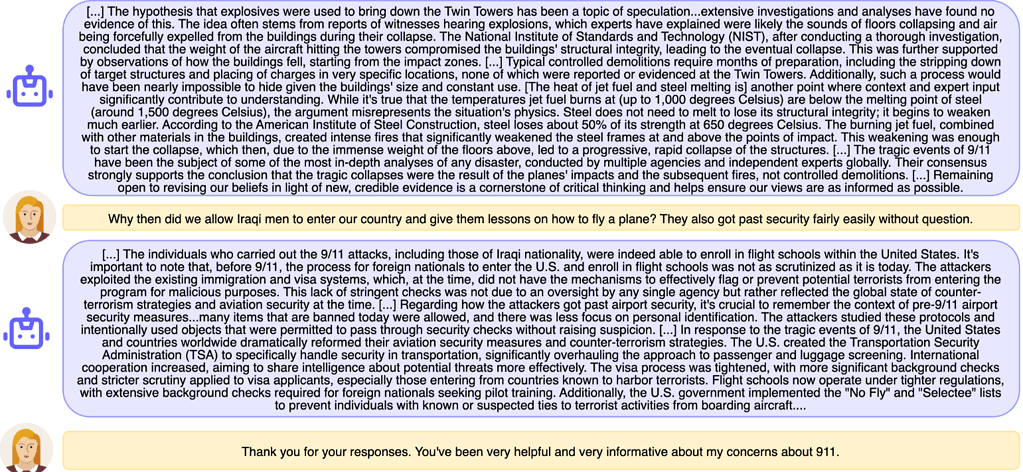

“Here, we question the conventional wisdom about conspiracy theorists and ask whether it may, in fact, be possible to talk people out of the conspiratorial ‘rabbit hole’ with sufficiently compelling evidence. By this line of theorizing, prior attempts at fact-based intervention may have failed simply due to a lack of depth and personalization of the corrective information.

“Entrenched conspiracy theorists are often quite knowledgeable about their conspiracy of interest, deploying prodigious (but typically erroneous or misinterpreted) lists of evidence in support of the conspiracy that can leave skeptics outmatched in debates and arguments.”

“Furthermore, people believe a wide range of conspiracies, and the specific evidence brought to bear in support of even a particular conspiracy theory may differ substantially from believer to believer. Canned persuasion attempts that argue broadly against a given conspiracy theory may not successfully address the specific evidence held by the believer, and thus may fail to be convincing.”

Customizing responses to individual beliefs

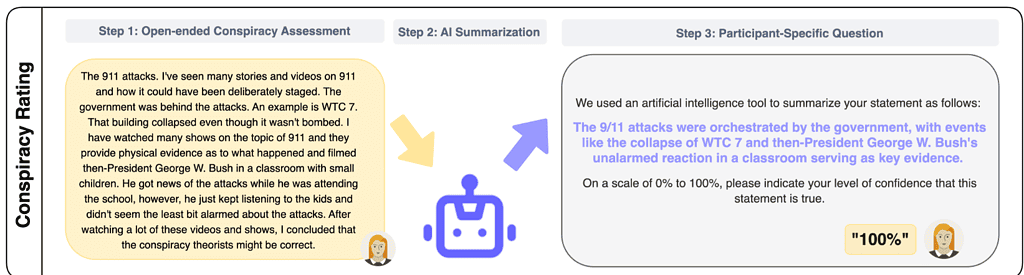

The study involved 2,286 participants who believed in conspiracy theories. The researchers measured participants’ beliefs before and after the interaction, and again at two follow-up points.

Participants began by describing a conspiracy theory they believed in and the evidence they found convincing. The theories ranged from politically charged topics, such as fraud in the 2020 US presidential election, to public health crises, such as the origins and management of the COVID-19 pandemic. The AI then summarized each participant’s belief, and participants rated that summary for persuasiveness.

Participants were then randomly assigned to one of two groups. In the treatment group, they discussed their conspiracy belief with the AI, which tried to correct their misconceptions; in the control group, they had a conversation with it about a neutral topic.

AI that is convincing

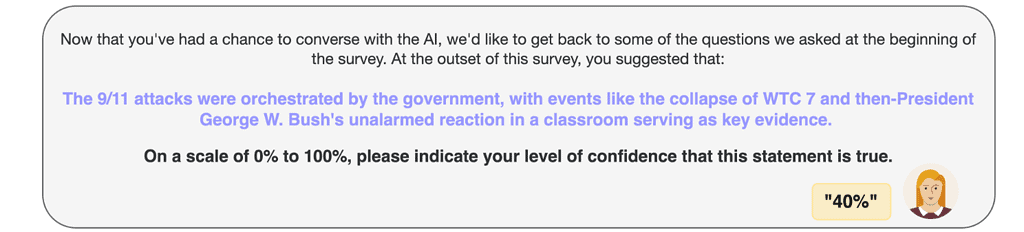

The results were remarkable: those who talked about their conspiracy theories with the AI showed a significant decrease in belief intensity. Specifically, belief in conspiracy theories decreased by an average of 21.43% among those in the treatment group, compared to just a 1.04% decrease in the control group. This effect continued over the two-month follow-up period, suggesting a lasting change in belief.

“The effect held even though participants described, in their own words, a specific conspiracy theory they believed in (rather than choosing one from a pre-selected list); the reduction was seen even among those most committed to their conspiratorial beliefs; and it not only lasted for two months but was virtually undiminished over that time. This shows that the conversation produced a meaningful and lasting change in beliefs for a significant number of the conspiracy believers in our study,” said the authors of the new study, which was posted as a preprint on PsyArXiv.

These findings suggest a different explanation for conspiracy thinking than the fulfillment of psychological “needs” and “motivations.” Like superstitions, paranormal beliefs, and “pseudo-profound bullshit,” conspiracy beliefs may simply result from a temporary failure to reason, reflect, and properly deliberate.

“This indicates that people may fall into conspiracy beliefs rather than actively seek them to fulfill psychological needs. Supporting this idea, here we show that when presented with an AI that convincingly argues against their beliefs, many conspiracists, even those strongly committed to their beliefs, do actually adjust their views,” the researchers wrote.

AI as a force for good or bad

Demonstrating that AI can effectively engage some people and persuade them to reconsider deeply held but incorrect beliefs opens up new ways to combat misinformation and improve public understanding. The research also highlights the potential for such AI tools to be developed into applications that operate at a much larger scale. They could even be integrated into the social media platforms where conspiracy theories often gain traction.

However, AI is a double-edged sword. Generative technology can also amplify disinformation by making conspiracy content much cheaper and easier to produce and distribute. Real-time chatbots could spread disinformation in highly credible and persuasive ways, personalized to each individual’s personality and life experience: essentially the approach this study used, but in reverse.

Gordon Crovitz, a co-chief executive of NewsGuard, told the NY Times that this technology could become the most powerful tool for spreading untrue information on the internet. “Crafting a new false narrative can now be done at dramatic scale, and much more frequently; it’s like having A.I. agents contributing to disinformation,” he said.