Let’s get it out of the way first: The new iPhone 11 Pro camera has the best overall features for a smartphone I’ve used. That may change when Google releases the Pixel 4 next month, but for now, the iPhone 11 Pro is the champ. And while I have no problem giving credit where it’s due, I’m left with some extremely complicated feelings about Apple cameras. Even beyond a few notable drawbacks, I wish it were simpler to know what’s actually going on inside the camera.

If you only hope to point your phone at a subject and get usable—and often pretty impressive—shots, then the new iPhone camera is unbeatable. But if you already know how to use a camera—even on a previous iPhone—there’s a learning curve that might change at any time thanks to software updates, and it could have a real effect on the look of your photos and videos. Plus, the 11 Pro is a reminder that iPhone photography—and smartphone shooting on the whole—is increasingly different than typical photography.

This post has been updated. It was originally published on 9/24/19.

The hardware in the iPhone 11 Pro camera

The iPhone 11 Pro comes with a trio of rear-facing cameras, including the familiar wide-angle and telephoto lens modules, as well as a new ultra-wide camera. If you’re serious about taking pictures and video and that guides your phone choice, you might as well jump on the Pro line and get an all-in-one package.

The lens selection makes sense for photographers. Professionals typically concentrate on three basic types of shots, including wide photos to set the scene, tight shots to provide intricate details, and standard middle shots to handle the bulk of the storytelling. Switching between the different camera modules on the 11 Pro makes this pretty straightforward and may even encourage some shooters to expand the kind of photos they capture. I can get behind that.

Shooting with each camera provides its own unique strengths and challenges, so let’s break them down individually. You can check out high-res versions of all the comparison shots against the iPhone 11 Pro camera photos here.

The wide-angle

The iPhone 11 Pro’s main camera remains the standard wide-angle; it’s by far the most useful and also the most advanced. There’s a new sensor inside that seems to have improved the performance in low natural light. It’s hard to gauge, however, because the overall picture-taking process relies so heavily on the software tweaks. Apple has increased the maximum ISO (the rating that indicates the camera’s perceived sensitivity to light) from roughly 2,300 up to about 3,400. Raising that setting typically increases ugly noise that shows up in your photos, so Apple is either more confident in the sensor’s inherent low-light chops or it has added more processing firepower to fix it later. The reality is probably a mixture of the two.


The lens maintains the same basic specs, including an f/1.8 aperture. I wouldn’t expect it to get much faster than that (lower numbers indicate wider apertures to let in more light, making the lens “fast”).

The telephoto lens

Telephoto lenses have been showing up on iPhone flagships since the 7 Plus, which is also where we first encountered Portrait mode. The telephoto lens gives shooters an angle of view equivalent to what you’d expect out of a roughly 52mm lens on a full-frame DSLR. Though that makes it a telephoto lens by the strict definition, photographers typically expect such lenses to start at 70mm or longer. The extra reach allows shooters to pick their way through complicated environments and single out a subject. The iPhone’s telephoto does a better job of this than the wide-angle, but it’s not going to replace a dedicated longer lens.

The sensor behind the 52mm lens is still smaller than the one behind the main wide-angle lens, but the maximum aperture goes from f/2.4 to f/2. Letting in more light is almost always welcome, but it’s a relatively small jump. So, if you’re expecting a huge change in the natural blur or low-light performance, you might be disappointed. It does, however, seem to have upped the focusing speed, which it desperately needed.
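To put that jump in numbers: the light reaching the sensor scales with the inverse square of the f-number, and each stop is a doubling of light. The short Python sketch below (the function name is my own, for illustration) works it out for the telephoto’s change, ignoring the sensor-size difference and any processing. Going from f/2.4 to f/2 comes out to about 1.44 times the light, or a little over half a stop.

```python
import math


def stops_gained(old_f_number: float, new_f_number: float) -> float:
    """Exposure difference, in stops, when opening up from old to new f-number.

    Assumes the standard relationship: light per unit of sensor area scales
    with 1 / (f-number squared), and one stop is a doubling of light.
    """
    light_ratio = (old_f_number / new_f_number) ** 2
    return math.log2(light_ratio)


gain = stops_gained(2.4, 2.0)
print(f"f/2.4 -> f/2.0: {(2.4 / 2.0) ** 2:.2f}x the light, about {gain:.2f} stops")
```

For comparison, a full one-stop improvement would mean going from f/2.8 to f/2, roughly twice the light.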

The iPhone 11 Pro camera’s super-wide lens


At a roughly 13mm equivalent, the super-wide lens is a new addition for the iPhone camera ecosystem. Like the telephoto, its sensor is smaller than the main camera’s, and it doesn’t have access to some of the other features like the new iPhone 11 Night Mode (lots more on that in a moment), Portrait Mode, or optical image stabilization. Thankfully, super-wide lenses don’t suffer as much from camera shake, which makes the lack of lens-based stabilization less of an issue.

Some critics immediately dismissed the new super-wide lens as a novelty, but 13mm isn’t much wider than the wide end of the 16-35mm zoom lens that photojournalists have been using for decades. Will people overuse it? Oh yeah. But it’s not inherently a special-effects lens like a true fisheye.
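For a rough sense of scale, here’s a sketch that computes the diagonal angle of view for a few full-frame-equivalent focal lengths. It assumes an ideal rectilinear lens and a 36 x 24mm frame, so treat the output as ballpark figures rather than Apple’s specs. By this math, 13mm covers roughly 118 degrees diagonally, versus about 107 degrees for 16mm.

```python
import math

# Assumption: "equivalent" focal lengths refer to a 36 x 24 mm full-frame
# sensor, whose diagonal is about 43.27 mm.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def diagonal_angle_of_view(focal_length_mm: float) -> float:
    """Diagonal angle of view, in degrees, of an ideal rectilinear lens."""
    half_angle = math.atan(FULL_FRAME_DIAGONAL_MM / (2 * focal_length_mm))
    return math.degrees(2 * half_angle)


# Super-wide, wide end of a 16-35mm zoom, main camera, telephoto.
for f in (13, 16, 26, 52):
    print(f"{f:>2} mm: {diagonal_angle_of_view(f):5.1f} degrees")
```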

The camera app bug

Before getting into the features, it’s worth noting that some users (including me) have encountered an issue with the iOS 13 camera app in which the screen stays black for up to 10 seconds before you can actually do anything. The iOS 13.1 update is on the way, and hopefully it will fix the problem, but it’s seriously annoying when it happens.

Night Mode

Last year, when Google introduced its Night Sight mode on the Pixel 3 smartphone, I was genuinely impressed. The company used the device’s single camera to capture a number of low-light shots in quick succession. It then pulled information from those shots and mashed them into one finished image that was impressively bright, relatively accurate color-wise, and surprisingly full of details that a typical high-ISO photo would obliterate with digital noise.
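The core idea behind this kind of multi-frame merge can be sketched in a few lines: averaging N frames of random noise shrinks that noise by roughly the square root of N, so 16 frames should cut it about fourfold. The Python below is a deliberately naive illustration that assumes perfectly aligned frames, a static scene, and independent Gaussian noise. Google’s and Apple’s real pipelines also align frames, deal with motion, and tone-map the result, and none of that is reproduced here.

```python
import random
import statistics


def simulate_frames(true_value, noise_sigma, n_frames, n_pixels, rng):
    """Return n_frames noisy captures of a flat, dim gray patch.

    Each frame is a list of pixel values: the true brightness plus
    independent Gaussian noise (a simplifying assumption).
    """
    return [
        [true_value + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]
        for _ in range(n_frames)
    ]


def average_frames(frames):
    """Naive merge: average each pixel across perfectly aligned frames."""
    n = len(frames)
    return [sum(pixel_values) / n for pixel_values in zip(*frames)]


rng = random.Random(42)  # fixed seed so the result is repeatable
frames = simulate_frames(true_value=0.1, noise_sigma=0.05,
                         n_frames=16, n_pixels=20_000, rng=rng)

single_noise = statistics.pstdev(frames[0])
merged_noise = statistics.pstdev(average_frames(frames))
print(f"single-frame noise:     {single_noise:.4f}")
print(f"16-frame average noise: {merged_noise:.4f} "
      f"(about {single_noise / merged_noise:.1f}x lower)")
```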

The iPhone 11 Pro camera answers Night Sight with its own Night Mode, which uses a similar multi-capture strategy and immediately became a fundamental piece of the shooting experience. Point the iPhone camera at a dark scene and a Night Mode icon pops up automatically. You can turn it off if you want to, but you have to consciously make that choice.

The first time you see it, it’s like witchcraft: It brightens up the dark scene to the point where every object in the picture is recognizable. If you’re expecting it to create what you’d get with even the most basic pop-up flash, however, you might be disappointed. Night Mode is great in situations where the light is relatively flat.

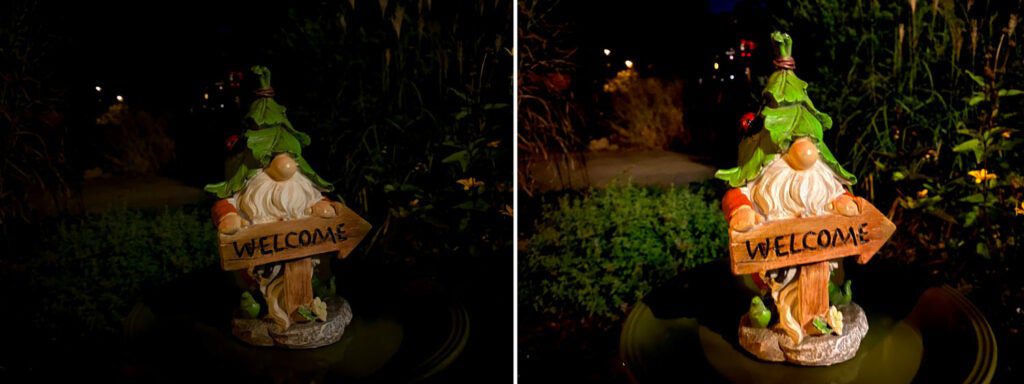

It’s not always magic, though. Take Night Mode into a situation with mixed or highly variable lighting and things can get out of hand. Consider, for instance, the picture of a garden gnome above. I turned Night Mode off for the first version and was pleasantly surprised at how much detail I got and how sharp the image is. With Night Mode on, the sharpness is still solid, but the colors go bonkers.

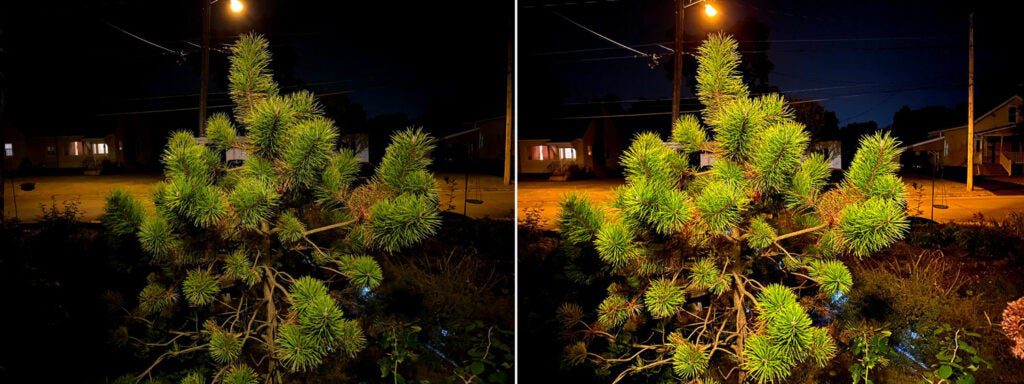

You can observe the same phenomenon here with this bush. It has the subtlety of a sledgehammer. Honestly, it reminds me of the overbearing processing that Samsung typically applies to its photos. Because Night Mode is on by default, it can be hard to fight through the initial urge to leave it on, even when you don’t need it. The picture pops up on screen, and you push the shutter because it’s so nice and bright.

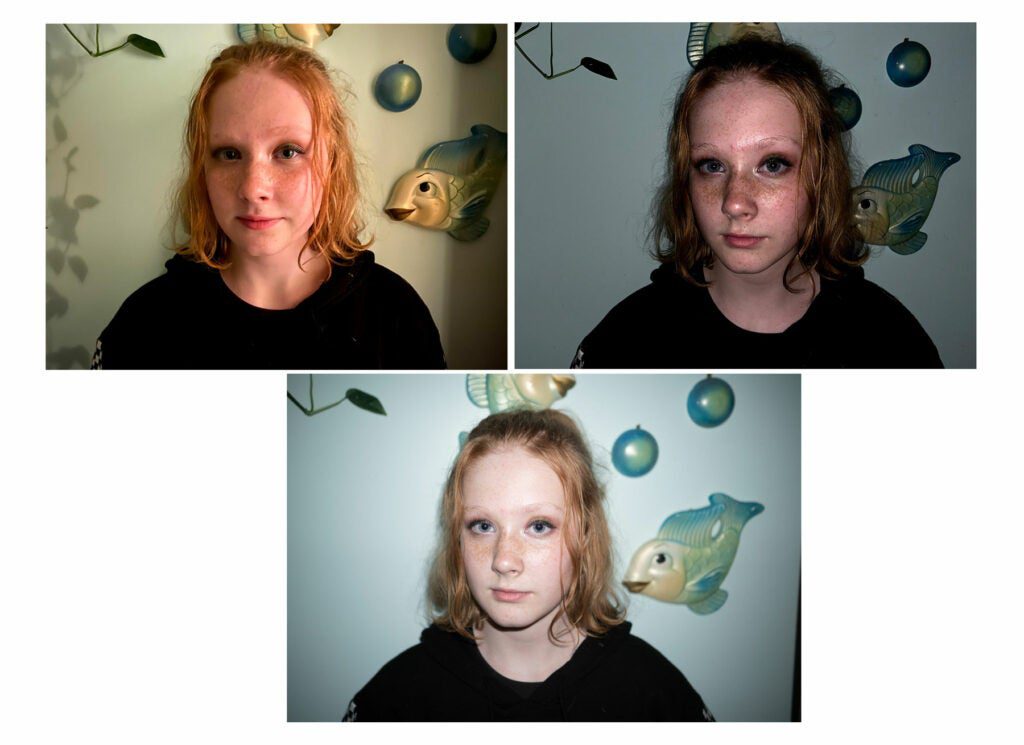

When it comes to skin tones, the same flat-light rule applies. In this example, there’s one dim tungsten beam coming from camera right. Without Night Mode, the shot was totally unusable: the frame either came out black or absurdly blurry due to the long exposure required.

In mixed-light conditions, however, things get more complicated. Few things make a person look less appealing in a photo than clashing colors of light across their face. Night Mode can sometimes exaggerate the effect by amplifying each light source, making the subject look wacky and cartoonish. Unfortunately, that’s a pretty common scenario in places like bars, weddings, or events. Apple has already done considerable work to make skin tones look more natural, and I expect this will improve over time.

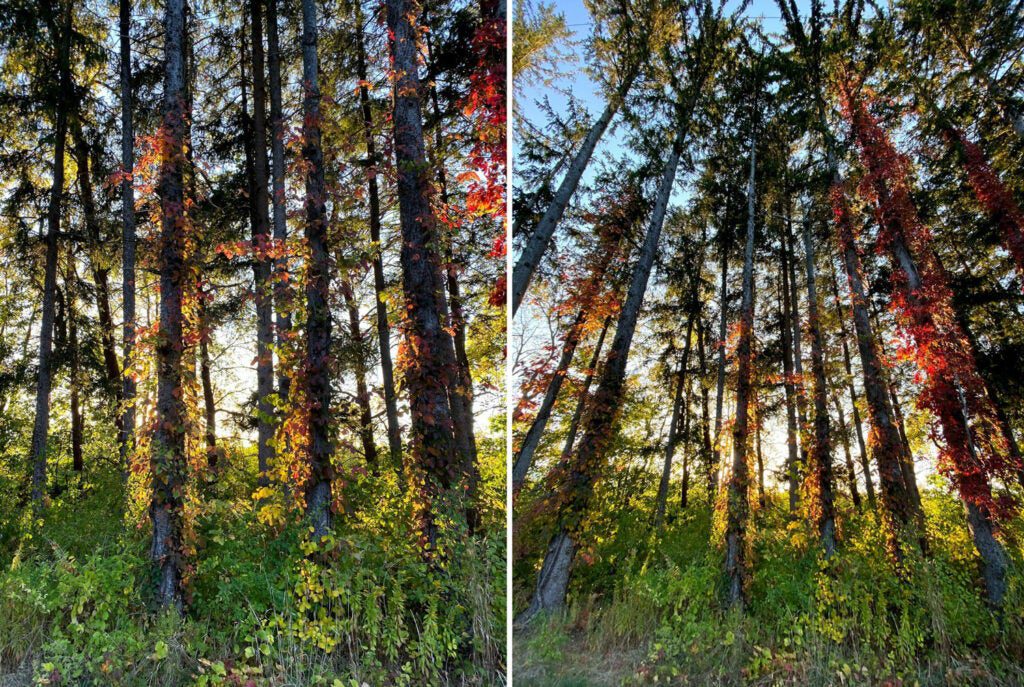

Generally, I’d love to see Night Mode tone it down a bit. Take these flowers under an ugly street light in the park, for instance. The Night Mode version (on the right), ironically, removes almost any indication that the photo was taken at night.

I would also like a little bit more freedom to experiment with it. Night Mode is capable of capture times all the way up to 30 seconds, but you can only access the full time if you put the iPhone on a tripod or something steady and it senses the lack of motion. I’m fine with a recommended length, but I’d like the opportunity to mess up the photos however I want. (Taking screwed-up shots is how you learn, after all.) Also, given that it’s kind of unclear if there’s any direct relationship between the length of the exposure and the overall brightness or characteristics of the image, I’d at least like to easily mess with the capture time and see what I can glean. That is, of course, until the next software update, which could change it completely.

A quick note about flash

I have seen some folks talk about how Night Mode makes flash obsolete. That’s mostly true if we’re talking about smartphone flashes, which are little more than glorified LED flashlights. Take a look at this grid of images.

Not only does the actual flash from the Sony create much higher overall image quality and sharpness (thanks to the short duration of the flash that doesn’t leave room for camera shake), it would also overpower any possible mixed-light temperature situations that you might encounter. In short, if you were thinking about getting a real camera because you can use flash, Night Mode shouldn’t change that at all.

Making tricky choices

Manual exposure modes in smartphone cameras have always been something of a scam, given their fixed apertures and lack of long-exposure functions. Still, it’s good for users to know what the camera is doing, or at least to have the option to. The iPhone 11 Pro camera makes a lot of choices that it doesn’t tell you about.

For starters, when you press the 2x zoom button, you’d probably assume you’re going to be using the telephoto camera, which literally doubles the focal length of the lens you’re using. But most of the time, just as on the iPhone XS, you’re actually enabling 2x digital zoom on the main wide-angle camera. If you’re familiar with camera lenses, you know that shooting with a 52mm lens is fundamentally different from shooting with a 26mm lens and cropping, especially when it comes to the field of view and the relationship between objects in your frame.

Above, you’ll find a picture of a transaction happening at the excellent Troy, NY, farmer’s market. I pressed the 2x zoom button for this image because the scene was plenty bright (the camera selected ISO 32) and I wanted to get as much natural blur as possible in front of and behind the subjects. The tighter field of view would also make it easier to crop out distractions like the guy in the sweatshirt on the left. It wasn’t until I got back home that I realized the 2x zoom button had simply cropped a picture from the wide-angle camera. It didn’t zoom optically; it zoomed digitally. As a result, the upscaled image has some ugly artifacts and sharpening noise that I don’t think should be there. I pushed a button for something I wanted and got something else.
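Here’s a back-of-the-envelope sketch of what that crop costs, assuming a 12-megapixel (4032 x 3024) capture from the main camera. A 2x crop keeps half the width and half the height, so only about a quarter of the pixels survive and have to be upscaled back to full size. The helper function is hypothetical, and the math ignores whatever processing Apple applies afterward.

```python
# Assumption: a 12-megapixel, 4:3 capture of 4032 x 3024 pixels from the
# main wide-angle camera.
SENSOR_WIDTH_PX = 4032
SENSOR_HEIGHT_PX = 3024


def digital_zoom_crop(zoom: float,
                      width: int = SENSOR_WIDTH_PX,
                      height: int = SENSOR_HEIGHT_PX) -> tuple:
    """Pixel dimensions actually used when "zooming" by cropping the center.

    Returns (crop_width, crop_height, total_pixels). Hypothetical helper
    for illustration only.
    """
    crop_w = round(width / zoom)
    crop_h = round(height / zoom)
    return crop_w, crop_h, crop_w * crop_h


w, h, total = digital_zoom_crop(2.0)
full = SENSOR_WIDTH_PX * SENSOR_HEIGHT_PX
print(f"2x digital zoom uses {w} x {h} = {total / 1e6:.1f} MP, "
      f"upscaled back to {full / 1e6:.1f} MP")
```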

Even though the telephoto lens has a wider aperture now and its sensor has improved, I found that the 52mm doesn’t focus very close. This picture of some tomatoes, for instance, didn’t allow me to use the true telephoto lens because it couldn’t focus close enough to make the composition work. I would have had to leave the bottom third of the frame empty and then crop in post.

A similar effect comes into play with long exposures. Night Mode captures images for up to 30 seconds at a time, but that’s not a single 30-second exposure; it’s 30 seconds spent capturing a series of shorter photos. The iPhone 11 Pro camera is technically capable of long exposures of up to one second, but if you try to use that feature in an app like Halide or Lightroom, the camera is so slow and jittery that it’s unusable.

Use Night Mode long enough and you’ll get a feel for the difference between a five-second shot and a 30-second shot. Still, it’s hard to quantify, and if it changes with the next software update or smartphone, you’ll get to figure it out all over again.

Where to start with the iPhone 11 Pro camera

Your head might be spinning with all these options and caveats, so let’s track back a little. What is it exactly that you want to shoot? I, for one, picture a photo in my head before I even pick up the camera—smartphone or otherwise. Dig into the so-iconic-it’s-cliche Ansel Adams book The Print and you’ll find a perfect summary of how photographers visualize a photo, then use the tools at their disposal to make it happen. After all, you didn’t really have a choice with film because you couldn’t preview it.

The iPhone, like pretty much any other digital camera, shows you a live preview, so taking a picture is as simple as saying, “OK, camera, that’s fine.” Now that the iPhone 11 Pro includes a super-wide camera, you can feel its effects even before you select it in the camera app.

The sections of the camera app around the shutter button and at the top of the screen are now translucent when you use the regular wide-angle camera, so you can see what lies just outside the frame. It has its uses, like when there’s a distracting minivan parked at the edge of a street you’d like to capture, and you want to preview exactly how wide you can make your picture while still leaving the car out of the frame.

Personally, though, I find the whole effect extremely distracting. It’s not so bad when you’re looking directly at the phone, but when you’re trying to compose a shot at a weird angle, it can be hard to tell where the picture you’re taking ends and the extra stuff behind the translucent veil begins. On a few occasions, I’ve mis-framed shots because it threw me off. I’m sure I’ll learn to use it effectively eventually, since the iPhone is constantly teaching me how to be a better iPhone photographer.

In the menus, you can actually tell the camera to capture image information outside the view of the normal wide-angle camera and store it for a month in case you realize you hated your initial composition and want to go back and change it. I left this turned off, but if you’re just learning how to compose photos and you want more flexibility, it could be a good learning tool.

Once you actually select the super-wide lens, you’ll likely find plenty of scenes that look “striking.” At a 13mm equivalent, you can capture an entire skyline and a massive field of clouds above it. Or you can cram an entire scene, especially a small one, into a single picture without having to take multiple images or make a panorama.

In general, I love having it as an option. Yeah, it’s wider than the standard lens, but it also makes objects in the frame appear to have more distance between them. So, when you’re trying to show how large and crowded a farmer’s market is, it makes a huge difference beyond the angle of view.

The same goes for nature and landscape photos. I couldn’t get this whole tree and the trademark landscape from Thacher Park in Upstate New York in a single frame with the standard lens. The super-wide handled it with no problem, though.

With that width, however, comes iPhone distortion—and the 11 Pro works too hard to correct it. Here’s a picture of a metal gate in which all the slats were relatively straight. You can see the distortion poking in, especially at the edges.

You’ll also notice it in the photo above that includes a skyline: the buildings lean hard toward the center of the frame. The app does warn you, though, if you put a person near the edge of the frame, because it knows the distortion will make them look stretched, squished, and generally bad.
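Why do people near the edge look stretched? In an ideal rectilinear projection (an assumption; the real lens and Apple’s corrections behave differently), a small round object at angle θ from the center of the frame gets stretched outward by a factor of 1/cos θ. The sketch below applies that to the left and right frame edges of a 13mm-equivalent lens and the 26mm-equivalent main camera, using a 36mm-wide full-frame reference. That works out to roughly 1.7x at the edge of the super-wide frame versus about 1.2x for the main camera. The function names are illustrative.

```python
import math

# Assumption: full-frame reference width of 36 mm for "equivalent" focal lengths.
FULL_FRAME_WIDTH_MM = 36.0


def horizontal_half_angle(focal_length_mm: float) -> float:
    """Angle (radians) from the optical axis to the left/right frame edge."""
    return math.atan(FULL_FRAME_WIDTH_MM / (2 * focal_length_mm))


def edge_stretch(theta_rad: float) -> float:
    """Radial-to-tangential stretch of a small round object at angle theta
    in an ideal rectilinear projection (1.0 means no stretching)."""
    return 1 / math.cos(theta_rad)


for f in (13, 26):
    theta = horizontal_half_angle(f)
    print(f"{f} mm: edge at {math.degrees(theta):.0f} degrees, "
          f"stretch about {edge_stretch(theta):.2f}x")
```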

Another drawback that I’m somewhat to blame for: My finger got in the way in quite a few of my wide-angle shots when I tried to hold the phone normally. The lens is so wide that it will easily catch your digit intruding on the frame if you’re not careful. So, I switched to holding the iPhone by the top and bottom instead of from the sides like I used to. Another lesson learned.

If you want a similar effect without the smaller sensor issues, you can opt for an accessory lens like the Moment Wide Angle, which modifies the look of the lens on the main camera.

The iPhone 11 Pro camera’s Portrait Mode

Portrait Mode has come a long way since its start and it’s better on the iPhone 11 than it ever has been, at least in the Apple ecosystem. I’ve typically liked Google’s treatment of the fake blur on the Pixel camera slightly better because it looks more natural to me. The 11 Pro, however, seems considerably quicker than pretty much any portrait mode I’ve used when it comes to finding focus and taking the picture.

The blur can still look unnatural if you shoot something too complicated (I used it on a horse sculpture made of sticks and it created a real mess), but Portrait Mode is still very solid for headshots. The selective sharpening can get overbearing, since it looks for specific elements like hair in an image. The beard hair in the selfie I took above has too much detail for my taste. A little blur can really help someone like me look more appealing in a photo.

I’m generally not much of a fan of the lighting effects, but that’s mostly personal snobbery. The Natural light effect flattens the light too much for me, which makes for a very odd contrast against the over-sharpened features. The rest of them still feel like novelties to me, though they are improving. Still, if you want a black-and-white version of your photo, I think you’re better off converting it in an app like Filmborn or Lightroom later. Or just slap an Instagram filter on it.

Lens flare

The lenses on the 11 Pro are obviously larger than those on the iPhone XS. Larger lenses typically increase image quality, and that’s generally the case here, but I noticed way more lens flare with this model. Some of it is pretty gnarly.

The above photo shows one of the worst instances from my test run. The bright sign in the back is a digital screen. It’s a common subject for tourist photos in my area because the historic theater often hosts big Broadway shows and national acts. You can see that a flipped version of what appeared on the screen shows up as a ghost in the image itself. This typically happens when photographers put a filter on the front of their lens: the light hits the front glass of the lens, bounces back into the filter, and an inverted reflection shows up in the picture. You can typically fight it by removing the filter, but that’s not an option with the iPhone.

The front glass on the iPhone 11 Pro cameras does a great job protecting the lenses, but it seems to flare a ton. Even when it’s not as bad as the theater sign, it’s easy to catch a bright light in the frame that sends weird elements shooting across the picture.

Some people like lens flare. J.J. Abrams has made a whole aesthetic out of it. But flare has some major drawbacks. First, it reduces overall contrast in parts of your photo. Second, the flare itself reveals a lens quirk that users typically don’t see, called “onion ring bokeh,” an effect that stems from the aspherical lens elements inside the lens. It also occurs in standalone cameras, especially with lenses that try to cram a lot of glass and refractive power into a small body. You can recognize it by looking in the flare or the out-of-focus highlights in a photo for rings inside the blurry blobs.

You typically don’t see the iPhone camera’s real bokeh because the lenses are wide and the sensors are small, which limits the amount of natural blur and leaves the software to add its own with Portrait Mode. If you want to see it for yourself, however, a scene like this dewy grass in morning light illustrates it perfectly. Focusing the lens super close on lots of bright details causes all those little onion rings to pop out of the scene. You might not notice it at first, but once you do, it’s always there (almost like an eye floater). On the iPhone 11 Pro, it shows up more in the flare as well.

On top of the onion rings, I’ve noticed some other weird flare effects. This basic picture shot with the ultra-wide camera in a mall parking lot has a flare with decidedly red, green, and blue streaks. And if you don’t wipe the lenses off regularly before taking a picture, you can expect considerable haze over the images (but you should be wiping your camera lens regularly anyway).

I don’t think this flare is a huge issue or a deal-breaker, but it’s definitely something to keep in mind as you’re shooting with the iPhone 11 Pro.

The verdict on the iPhone 11 Pro camera

As I said at the start, I think the 11 Pro camera is the best smartphone camera package around at the moment. If you’re expecting it to compete with a dedicated camera, though, it still can’t, especially because its photos still look distinctly Apple-y. Of course, that may change with software updates. I wholeheartedly think the new camera is better than the iPhone XS’s, which really seemed to overdo the HDR effect, sometimes making people look orange and high-contrast scenes look like a video game. But under many lighting conditions, you probably won’t notice an enormous difference (barring Night Mode).

The iPhone 11 Pro photos look better than the iPhone XS photos overall, and the flexibility of the super-wide angle lens makes it a very substantial upgrade. But it’s not a revolution.

You’ll also have to accept some minor quirks. For instance, the iPhone camera reportedly increases sharpening on hair, including facial hair. The effect accentuates frizzy flyaways and makes details look too sharp. It sounds weird given that sharpness is typically a good feature in photos, but tell that to the weird artifacts that become painfully obvious in the final captures.

As you adjust the exposure settings in the iPhone camera app, the brightness of certain elements will change, while others won’t really budge. So, if you’re taking a picture of natural scenery in front of a blue sky, raising or lowering the exposure will make the greenery brighter or darker, but the sky will stay a very similar shade of blue that’s hard to blow out, even if you try. The iPhone camera knows what’s in the scene and is trying to maximize your chances of taking a good photo, even when you kind of want to take a bad one.

What’s next?

Then there’s Deep Fusion, the Apple tech coming later this fall. The AI-powered software will reportedly change the iPhone’s photo and video performance drastically again. And it won’t stop there. Once you learn to shoot with an iPhone camera, there’s a good chance you’re going to need to learn it all over again in a generation or two. Or you could just accept the iPhone’s suggestions and shoot like it wants you to. It makes life a lot easier (and more technicolored).