By DAVID KLEPPER (Associated Press)

WASHINGTON (AP) — There are concerns about deepfakes and disinformation driven by artificial intelligence. Worries about political campaigns and candidates using social media to spread false information about elections. Fears that technology companies will not address these problems as their platforms are utilized to undermine democracy before crucial elections.

These are the concerns facing elections in the U.S., where most voters speak English. But for speakers of languages such as Spanish, or in dozens of nations where English isn’t the dominant language, there are even fewer safeguards in place to protect voters and democracy against the corrosive effects of election misinformation. It’s a problem getting renewed attention in an election year in which more people than ever will go to the polls.

Technology companies have faced intense political pressure in places like the U.S. and the European Union to show they are serious about addressing the unfounded claims, hate speech, and authoritarian propaganda that pollute their platforms. However, critics argue that they have been less responsive to similar concerns from smaller countries or speakers of minority languages, reflecting a longstanding bias toward English, the U.S., and other Western democracies.

Recent changes at technology companies, including layoffs of content moderators and decisions to roll back some misinformation policies, have only compounded the situation, even as advances in artificial intelligence make it easier than ever to create realistic audio and video that can deceive voters. These gaps have created an opening for candidates, political parties, or foreign adversaries seeking to sow electoral chaos by targeting non-English speakers, whether Latino voters in the U.S. or the millions of voters in countries like India who speak languages other than English.

“If there’s a significant population that speaks another language, you can bet there’s going to be disinformation targeting them,” said Randy Abreu, an attorney at the U.S.-based National Hispanic Media Council, which established the Spanish Language Disinformation Coalition to monitor and identify misinformation targeting Latino voters in the U.S. “The power of artificial intelligence is now making this an even more frightening reality.”

Many of the biggest technology companies highlight their efforts to protect elections, and not only in the U.S. and E.U. This month, Meta is launching a WhatsApp service that will let users report potential AI deepfakes for review by fact-checkers. The service will support four languages: English, Hindi, Tamil, and Telugu. Meta says it has teams monitoring for misinformation in multiple languages, and the company has announced additional election-year AI policies that will apply globally, including mandatory labels for deepfakes and for political ads created with AI. However, those rules have not yet taken effect, and the company has not said when it will begin enforcing them.

The regulations governing social media platforms differ by country, and critics of technology companies argue that the companies have been quicker to address concerns about misinformation in the U.S. and the E.U., which recently enacted new laws designed to address the problem.

Tech companies often give the same basic response to other countries, and it’s not good enough, according to a study by the Mozilla Foundation.

The study examined 200 different policy announcements from Meta, TikTok, X, and Google (the owner of YouTube) and found that almost two-thirds focused on the U.S. or E.U. The foundation found that actions in those regions were more likely to involve significant investments of staff and resources, while new policies in other nations were more likely to rely on partnerships with fact-checking organizations and media literacy campaigns. Odanga Madung, a researcher from Nairobi, Kenya, who conducted the study for Mozilla, said it became clear that the platforms’ focus on the U.S. and E.U. comes at the expense of the rest of the world.

“It’s a glaring travesty that platforms blatantly favor the U.S. and Europe with excessive policy coddling and protections, while systematically neglecting” other regions, Madung said.

Failing to focus on other regions and languages will increase the risk that election misinformation misleads voters and affects the results of elections. Such claims are already circulating around the world.

In the U.S., voters who speak languages other than English are already encountering a wave of misleading and baseless claims, Abreu said.

Claims that target Spanish speakers, for example, include posts that exaggerate voter fraud or share false information about casting a ballot or registering to vote.

Election disinformation in Africa has increased ahead of recent elections, according to a study released this month by the Africa Center for Strategic Studies, which identified dozens of recent disinformation campaigns, a four-fold increase from 2022. The false claims included baseless allegations about candidates, false information about voting, and narratives designed to undermine support for the United States and the United Nations. The center found that some campaigns were carried out by groups allied with the Kremlin, while others were led by domestic political groups.

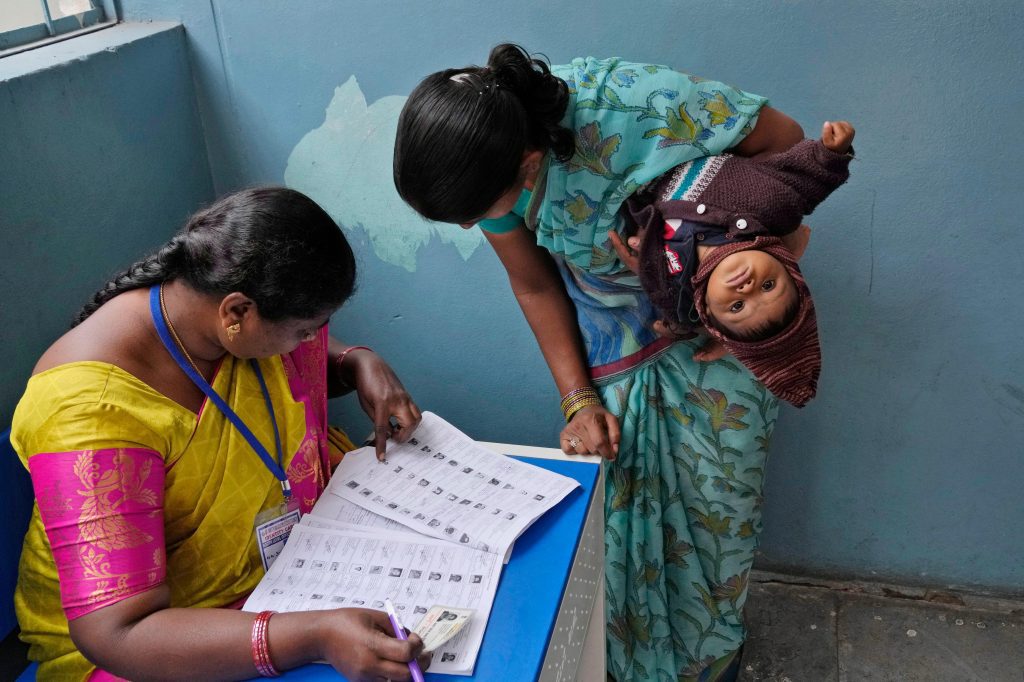

India, the world’s largest democracy, has more than a dozen languages, each with more than 10 million native speakers. It also has more than 300 million Facebook users and nearly half a billion WhatsApp users, the most of any nation. Fact-checking organizations have become the first line of defense against viral misinformation about elections. The country will hold elections later this spring, and already voters looking online for information about the candidates and issues are inundated with false and misleading claims. Among the latest: video of a politician’s speech that was carefully edited to remove key lines; years-old photos of political rallies passed off as new; and a fake election calendar that provided the wrong voting dates.


A lack of significant steps by tech companies has forced groups that advocate for voters and free elections to band together, said Ritu Kapur, co-founder and managing director of The Quint, an online publication that recently joined with several other outlets and Google to create a new fact-checking effort known as Shakti.

“Mis- and disinformation is proliferating at an alarming pace, aided by technology and fueled and funded by those who stand to gain by it,” Kapur said. “The only way to combat the malaise is to join forces.”

Election misinformation will be a difficult problem this year as billions of people in dozens of countries go to the polls.