In March, the dating app Bumble banned guns. Daters swiping left or right on each other’s alluring poses can no longer use a profile photo of themselves holding a firearm. The ban came more than a year after the service restricted shirtless bathroom mirror selfies. “We wanted Bumble to feel truly like a dating app,” says Alex Williamson, Bumble’s chief brand officer, explaining why the app doesn’t allow those not-so-classy shirtless bathroom mirror selfies. That type of photo, after all, exudes a hook-up vibe, which is not what Bumble is going for, Williamson says. But how do you enforce a rule like that?

In addition to employing more than 4,000 human moderators, the company turned to machine learning to look for those guns and six-pack photos. “You hold a gun in a certain way, and you hold your phone in a certain way in front of a mirror to take a selfie,” says Williamson, explaining how it works, “and that’s how the machine learning picks up on that.”

To some, artificial intelligence and machine learning (a subfield of AI) seem scary. Pop culture has bombarded us again and again with the same silly rise-of-the-machines scenarios. It probably didn’t help that at a recent event, Google revealed an AI-driven voice system that can make convincingly human-sounding phone calls to restaurants and hair salons. Nor does it help that Elon Musk takes a dark view of the technology’s potential dangers. But the simple fact is that you already encounter AI all the time in everyday life. It’s taking on futuristic tasks, such as helping self-driving cars see objects like traffic lights, but it’s also behind more mundane services, including ones that help you decide what to have for dinner.

A real-estate quant in the cloud

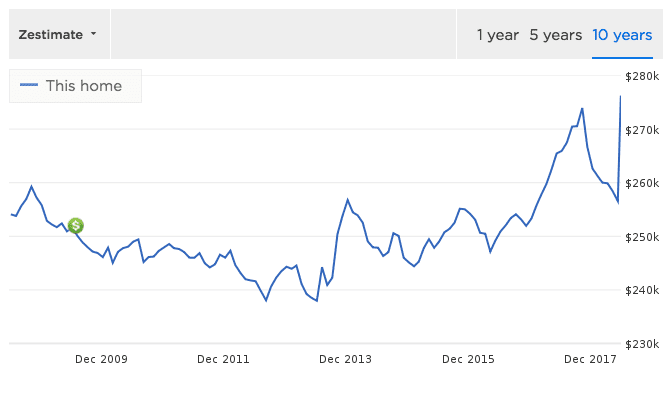

People on Bumble are looking for dates. People who go to Zillow are checking out real estate, and today the website can tell you the estimated values of some 100 million homes. Curious about the current value of the place where you grew up, or the home you bought a decade ago? You can see a Zestimate of its value, as the company calls the feature. But a Zestimate is not a back-of-the-envelope calculation: an AI system made up of more than seven million machine learning models powers the service, and Zillow says it has an error rate of about 4.3 percent.

The system “discovers its own patterns for how to value real estate,” says Stan Humphries, Zillow’s chief analytics officer.

Unsurprisingly, Zillow uses cloud computing to power its real-estate modeling. But when the company was first getting off the ground and building the Zestimate feature, before its launch in early 2006, it started small. Back then, it was calculating Zestimates for some 43 million homes, and it also wanted to create a 15-year history for each of those home values, an endeavor that meant generating about 7.7 billion data points. To do that, the company moved a bunch of computers into the office game room, set them on a couple of ping-pong tables, and connected the machines so they could work in parallel, Humphries remembers. There were “orange extension cords snaking through the office,” he says.

Those game-room computing days are long past. Now the company is working on a new feature: using artificial intelligence to analyze photographs of the homes on Zillow to judge how nice they are. That matters because the difference between a modern kitchen and a crummy one can affect a home’s value. Here’s how it works: a type of AI called a neural network analyzes each photo to figure out what kind of scene it’s looking at, such as a kitchen, bathroom, or bedroom. Then a second neural network estimates the room’s quality.
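To make that two-step idea concrete, here is a toy sketch in Python. It is not Zillow’s code: the `Photo` type, the precomputed scene scores, and the per-room quality rules are all hypothetical stand-ins for the two neural networks, included only to show how the first model’s answer decides which second model gets to rate the photo.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Photo:
    # Hypothetical stand-in for an image. A real system would feed pixels
    # to a neural network; here each photo carries precomputed scores.
    features: Dict[str, float]


SCENES = ("kitchen", "bathroom", "bedroom")


def classify_scene(photo: Photo) -> str:
    """Stage 1 (hypothetical stand-in): pick the room type with the highest score."""
    scores = {room: photo.features.get(f"{room}_score", 0.0) for room in SCENES}
    return max(scores, key=scores.get)


# Stage 2 (hypothetical stand-ins): one quality model per room type,
# each returning a rating between 0 and 1 (0.5 when no signal is present).
QUALITY_MODELS: Dict[str, Callable[[Photo], float]] = {
    "kitchen": lambda p: p.features.get("finish_quality", 0.5),
    "bathroom": lambda p: p.features.get("finish_quality", 0.5),
    "bedroom": lambda p: p.features.get("light_quality", 0.5),
}


def rate_photos(photos: List[Photo]) -> List[Tuple[str, float]]:
    """Two-stage cascade: label each photo's scene, then score it with that scene's model."""
    results = []
    for photo in photos:
        scene = classify_scene(photo)
        quality = QUALITY_MODELS[scene](photo)
        results.append((scene, quality))
    return results


photos = [
    Photo({"kitchen_score": 0.9, "bathroom_score": 0.05, "finish_quality": 0.8}),
    Photo({"bedroom_score": 0.7, "light_quality": 0.3}),
]
print(rate_photos(photos))  # [('kitchen', 0.8), ('bedroom', 0.3)]
```

Splitting the job this way lets each quality model specialize: a rule for judging kitchens never has to make sense of a bedroom. In a real system, each stand-in function would be a trained network.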

The new feature, now rolled out in Washington’s King County, has boosted the accuracy of the company’s home price estimates by 15 percent, according to Zillow.

“That’s a great example of AI,” says Humphries, “because it is something that humans have typically been very good at, and computers are not.” In other words, estimating the value of millions of homes seems like an obvious job for a computer, whereas looking at photos and judging quality is something humans do naturally. But now the computers are helping there, too. “We’re now trying to teach computers that same sensibility that a person has,” he says. “Teaching a computer to appreciate curb appeal is truly artificial intelligence.”

So while users never interact with this AI the way they would if a phone call from Google Assistant came their way, they’re still subject to a computer’s real-estate sensibilities.

Hot dog, or not hot dog?

Zillow is not the only company working on teaching computers to understand what’s in photos. At Yelp, people upload some 100,000 photos to the site every day, according to the company. To organize all those taco, burger, donut, sushi, and dessert pics, the team turned to artificial intelligence.

For instance, consider Carmine’s Italian restaurant in Times Square, Manhattan. There are over 1,800 food photos on its Yelp page, including oodles of pasta pics as well as salad shots and dessert images. The fact that the photos are all conveniently sorted by food type is thanks to AI.

But before Yelp can sort food pictures by category, it needs to identify what’s in each photo in the first place. A high-level network classifies photos by their content: whether it’s a shot of food, the menu, drinks, or the restaurant’s interior or exterior, explains Matt Geddie, a product manager at Yelp.

Then another network identifies what type of food is in those grub-filled shots. Geddie rattles off the different food types it can identify, a list that includes burritos, pizza, burgers, and cupcakes. And also, hot dogs. “Which we had before the Silicon Valley episode that made that classification popular,” Geddie points out. (In the HBO show Silicon Valley, a character named Jian-Yang invents an app that identifies a food item as either a hot dog or not a hot dog: a binary is-it-or-isn’t-it-a-sausage system.)

So today, AI and machine learning are recognizing hot dogs, estimating real estate prices, and keeping cheesy shirtless bathroom mirror selfies off a dating app. “The state of AI is that we have AI systems that are very good at doing very narrow things,” says Pedro Domingos, author of the book The Master Algorithm and a professor of computer science and engineering at the University of Washington. “But what really eludes us, despite 50 years of research, is integrating all those things into one general intelligence, which is what humans are really good at, and we really are not that close to having.”

For now, enjoy looking at those pasta pics and real estate prices, and don’t worry too much about machines taking over the world—at least not just yet.